Host-INT brings network packet telemetry

Packet Timer

Lead Image © Vasiliy-Yakobchuk, 123RF.com

Inband Network Telemetry and Host-INT can provide valuable insights into network performance, including information on latency and packet drops.

Hyperscale data centers are seeking more visibility into network performance for better manageability. This challenge becomes more difficult as the number of switches and servers grows and as data flows evolve to 100Gbps and higher. Knowing where network congestion occurs and how it affects data flows and service level agreements (SLAs) is critical.

Inband Network Telemetry (INT) is an open specification from the P4 open source community [1]. The goal of INT is to allow the collection and reporting of telemetry on packets as they flow through the network. Intel has brought this capability to the Linux open source community with Host Inband Network Telemetry (Host-INT). The Host-INT framework can collect data on network conditions, such as latency and packet drops, that would be very hard to obtain otherwise. Host-INT is ideal for app developers who need to know the network's impact on an application. Similarly, a large wide area network (WAN) service provider could use Host-INT to ensure that its service level agreements are being met.

Host-INT builds on Switch-INT, another P4 implementation that performs measurements on network packets. Both Host-INT and Switch-INT are designed to operate entirely in the data plane of a network device (e.g., in the hardware fast path of a switch ASIC or Ethernet network adapter). Switch-INT is currently running on programmable switch infrastructures such as Intel's Tofino Intelligent Fabric Processors [2]. By operating in the data plane, Switch-INT can provide extensive network telemetry without impacting performance.

How Host-INT Works

Host-INT is implemented in the Linux server, not in the switches, and thus looks at packet flows from outside the network. The INT source node, located at the network ingress, inserts instructions and network telemetry information into the packet. The INT sink, located at the network egress, removes the INT header and metadata from the packet and sends the extracted telemetry to the telemetry collector.

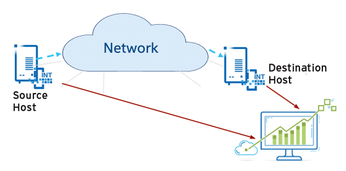

The Host-INT configuration consists of a source host, where packets are modified to include the telemetry information, and the destination host, where metadata is collected and reported to an analytics program (Figure 1). The result is that Host-INT is able to collect difficult-to-obtain performance data. A DevOps team, for example, could use Host-INT to study the impact of the network on their app's performance – and obtain much more detailed information than would be available using conventional tools like ping and traceroute.

Figure 1: Host-INT in action: The source host adds an INT header to the packet. The destination host extracts the header and reports telemetry metadata to an analytics program.

Also, organizations that depend on large WANs can use the latency and packet drop data that comes from Host-INT to ensure service levels and connect early with their service provider to minimize repair time.

Host-INT Collects Metadata

The Host-INT specification describes several options for collecting additional metadata from packet headers in order to measure latency, depth of the queues that the packet passes through, and link utilization levels.

After you install and enable Host-INT on Linux servers (check the Host-INT GitHub page [3] for more on supported Linux distributions and versions), it adds INT headers to IPv4 TCP and UDP packets, which are then read by another INT-enabled host on the network. For example, if you configure Host-INT on host A to add INT headers to all packets sent from host A to host B, an extended Berkeley Packet Filter (eBPF) program loaded into the Linux kernel on host A modifies all TCP and UDP packets destined for host B.

The INT header contains:

- The time when the packet was processed.

- A sequence number that starts at 1 for the first packet of each flow and increments for each subsequent packet of the flow.

Host-INT's notion of a flow is all packets with the same 5-tuple (i.e., the same combination of values for these five packet header fields):

- IPv4 source and destination address

- IPv4 protocol

- TCP/UDP source and destination port
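The source-side bookkeeping described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Host-INT's eBPF code: the names `FlowKey`, `IntMetadata`, and `SourceFlowTable` are hypothetical, and the real header layout is not shown here.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowKey:
    """The 5-tuple that identifies a flow (hypothetical field names)."""
    src_addr: str
    dst_addr: str
    protocol: int   # IPv4 protocol number, e.g. 6 = TCP, 17 = UDP
    src_port: int
    dst_port: int


@dataclass(frozen=True)
class IntMetadata:
    """The two values the article says the INT header carries."""
    timestamp_ns: int
    seq_num: int


class SourceFlowTable:
    """Hands out per-flow sequence numbers, starting at 1 for each flow."""

    def __init__(self):
        self._next_seq = {}

    def stamp(self, key, now_ns=None):
        # Use the wall clock unless the caller supplies a timestamp.
        if now_ns is None:
            now_ns = time.time_ns()
        seq = self._next_seq.get(key, 1)
        self._next_seq[key] = seq + 1
        return IntMetadata(timestamp_ns=now_ns, seq_num=seq)
```

Because `FlowKey` is a frozen dataclass, it is hashable and can serve directly as a dictionary key, which loosely mirrors how a 5-tuple would index a map of per-flow state.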

The packet encapsulation format used in Host-INT's initial release adds the INT headers after the packet's TCP or UDP header. In order for the receiving host B to distinguish packets that have INT headers from those that do not, host A also sets the Differentiated Services Code Point (DSCP) within the IPv4 Type of Service field to a configurable value. In practice, it is good to consult with a network administrator to find a DSCP value that is not in use for other purposes within the network.
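To make the DSCP marking concrete, the following sketch shows the bit arithmetic involved. The DSCP occupies the upper six bits of the former IPv4 Type of Service byte, and the lower two bits carry ECN. The value `INT_DSCP` and the helper names are hypothetical, chosen only for illustration; Host-INT's actual default may differ.

```python
INT_DSCP = 0x17  # hypothetical marker value; pick one unused in your network


def set_dscp(tos: int, dscp: int) -> int:
    """Return the ToS byte with its DSCP bits replaced, keeping the ECN bits."""
    if not 0 <= dscp <= 0x3F:
        raise ValueError("DSCP must fit in 6 bits")
    return ((dscp << 2) | (tos & 0x03)) & 0xFF


def get_dscp(tos: int) -> int:
    """Extract the 6-bit DSCP from a ToS byte."""
    return (tos >> 2) & 0x3F


def has_int_header(tos: int, int_dscp: int = INT_DSCP) -> bool:
    """Receiver-side check: does the DSCP mark this packet as carrying INT?"""
    return get_dscp(tos) == int_dscp
```

Preserving the two ECN bits matters because other parts of the network may rely on them for congestion signaling.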

When packets arrive at host B, another eBPF program running in host B's kernel processes them. If a packet has an INT header (determined by the DSCP value), the eBPF program removes the header and sends the packet on to the Linux kernel networking code, from where it proceeds to the target application.

Before removing the INT header, host B's eBPF program calculates the packet's one-way latency: the time at which host B received the packet minus the timestamp that host A placed in the INT header. Note that any inaccuracy in the synchronization of the clocks on host A and host B introduces an error into this one-way latency measurement. Use Network Time Protocol (NTP) or Precision Time Protocol (PTP) to synchronize the clocks of the devices on your network.
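A short worked example shows how a clock offset feeds directly into the measurement. The numbers below are invented for illustration, and `one_way_latency_ns` is a hypothetical helper rather than a Host-INT function.

```python
def one_way_latency_ns(rx_time_ns: int, tx_timestamp_ns: int) -> int:
    """Receive time on host B's clock minus send timestamp on host A's clock."""
    return rx_time_ns - tx_timestamp_ns


# Assumed values: the packet really spends 250 microseconds in the network,
# and host B's clock runs 40 microseconds ahead of host A's.
true_latency_ns = 250_000
clock_offset_ns = 40_000

tx_timestamp_ns = 1_000_000_000                                  # read on host A
rx_time_ns = tx_timestamp_ns + true_latency_ns + clock_offset_ns  # read on host B

measured_ns = one_way_latency_ns(rx_time_ns, tx_timestamp_ns)
error_ns = measured_ns - true_latency_ns  # equals the clock offset
```

In this example, the measured latency is 290 microseconds: the full 40 microsecond offset appears as measurement error, which is why tight clock synchronization matters for small latencies.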

Host B's eBPF program looks up the packet's flow (determined by the same 5-tuple of packet header fields used at the source host A) in an eBPF map. For each flow of packets that it receives with INT headers, host B independently keeps the following data:

- The last time a packet was received.

- The one-way latency of the previous packet received by host B for this flow.

- A small data structure that, used in combination with the sequence number in the INT header, determines how many packets were lost in the network for this flow.
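One simple way to picture this per-flow state is sketched below. The article does not specify the loss-tracking structure, so this sketch assumes a basic scheme: remember the highest sequence number seen and the number of packets received, and treat the difference as the loss count. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FlowState:
    """Per-flow receiver state (illustrative, not Host-INT's actual layout)."""
    last_rx_time_ns: int = 0
    last_latency_ns: int = 0
    highest_seq: int = 0    # largest sequence number seen so far
    packets_seen: int = 0   # packets actually received with INT headers

    def update(self, seq_num: int, rx_time_ns: int, latency_ns: int) -> None:
        self.last_rx_time_ns = rx_time_ns
        self.last_latency_ns = latency_ns
        self.highest_seq = max(self.highest_seq, seq_num)
        self.packets_seen += 1

    @property
    def lost_packets(self) -> int:
        # Sequence numbers start at 1, so highest_seq is the number of
        # packets the sender has emitted up to the newest one received.
        return self.highest_seq - self.packets_seen
```

With this scheme, a packet that arrives late because of reordering lowers the loss count again when it shows up. Duplicate packets would skew the count, so a more careful structure would also track which sequence numbers have already been seen.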

Host B will generate an INT report packet upon any of these events:

- The first packet of a new flow is received with an INT header.

- Once per packet-drop report period, every flow is checked to see whether any new packet drops have been detected since the previous period. If new packet losses have been detected, an INT loss report packet is generated. The report contains the number of lost packets detected and the header of the packet.

- If the one-way latency of the current packet is significantly different from the latency of the previous packet for this flow, an INT latency report packet is generated.
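The three triggers can be sketched as a small decision routine. This is a simplified model under stated assumptions: the threshold `latency_delta_ns` stands in for "significantly different," losses are estimated as the highest sequence number minus the packets received, and all names are hypothetical rather than taken from Host-INT.

```python
from dataclasses import dataclass
from enum import Enum


class ReportType(Enum):
    NEW_FLOW = "new_flow"
    LATENCY = "latency"
    LOSS = "loss"


@dataclass
class _Flow:
    last_latency_ns: int
    highest_seq: int = 0
    packets_seen: int = 0
    lost_reported: int = 0


class ReportPolicy:
    def __init__(self, latency_delta_ns):
        self.latency_delta_ns = latency_delta_ns  # assumed threshold
        self.flows = {}

    def on_packet(self, key, seq_num, latency_ns):
        """Return the report types triggered by one INT-marked packet."""
        reports = []
        flow = self.flows.get(key)
        if flow is None:
            flow = self.flows[key] = _Flow(last_latency_ns=latency_ns)
            reports.append(ReportType.NEW_FLOW)
        elif abs(latency_ns - flow.last_latency_ns) >= self.latency_delta_ns:
            reports.append(ReportType.LATENCY)
        flow.last_latency_ns = latency_ns
        flow.highest_seq = max(flow.highest_seq, seq_num)
        flow.packets_seen += 1
        return reports

    def on_drop_timer(self):
        """Periodic check: return (flow key, newly detected losses) pairs."""
        reports = []
        for key, flow in self.flows.items():
            lost = flow.highest_seq - flow.packets_seen
            if lost > flow.lost_reported:
                reports.append((key, lost - flow.lost_reported))
                flow.lost_reported = lost
        return reports
```

Checking for losses on a timer instead of on every packet keeps the per-packet path short and bounds the rate of loss reports per flow.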

Thus, by looking at the stream of INT report packets, Host-INT can monitor the following things:

- Every time the latency of a flow changes significantly

- Periodic updates of the lost packet count

Both of these things are reported on a per-flow basis.

Host-INT generates INT report packets that are sent from INT packet receivers back to the sending host, where the data extracted from the report is written to a text file. Adding other components expands the functionality of Host-INT. Host-INT supports extensions that send INT report packets to the host that sent the packet containing the header – and even to a pre-configured IP address.

The typical reason to use a pre-configured IP address is to send INT reports from many hosts to a telemetry analytics system that can collect, collate, analyze, and display telemetry data so operators can make insightful decisions about their network. (Intel's Deep Insight Analytics software [4] is an example of an analytics system that can process INT reports.)

Current Limitations

Although Host-INT's reports can help network administrators learn about flows experiencing high latency or many packet drops, Host-INT cannot currently help narrow down the root cause of this behavior within a network. Switch-INT, however, can help find the root cause. If you deploy INT-capable switches on your network, you can configure those switches to generate INT reports on events such as packet drops or packet queues causing high latency. By configuring your switches properly, you can quickly determine the actual location in the network where congestion and packet drops occur. You can also obtain snapshots of other packets that were in the same queue as the dropped or high-latency packets.
