Let an AI chatbot do the work

Programming Snapshot – ChatGPT

The electronic brain behind ChatGPT from OpenAI is amazingly capable when it comes to chatting with human partners. Mike Schilli picked up an API token and has set about coding some small practical applications.

Every month just before Linux Magazine goes to press, I hold secret rites to conjure up an interesting topic at the last minute. So it is with interest that I have followed the recent meteoric rise of the AI chatbot ChatGPT [1], which – according to the alarmist press – is so smart that it will soon outrank Google as a search engine. Could this system possibly help me find new red-hot article topics that readers will snap up with gusto, greedily imbibing the wisdom I bundle into them?

The GPT in ChatGPT stands for Generative Pre-trained Transformer. The AI system analyzes incoming text, figures out what kind of information is wanted, generates a fitting answer with a massive language model trained on text from the Internet, and packages it as a text response. Could the electronic brain possibly help me find an interesting topic for this column?

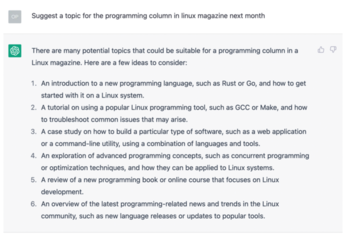

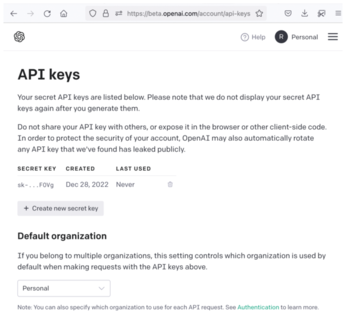

I put it to the test by typing the question into the input box on ChatGPT's website (Figure 1), and, to my amazement, the AI actually did come up with a couple of usable topics: introductions to Rust and Go, or a deep dive into parallel programming. That sounds interesting!

ELIZA 2.0

The interface feels like a modern version of ELIZA [2], the classic program of this genre from the stone age of computing (the mid-1960s). In fact, after ELIZA, AI-based natural language processing made almost no progress for half a century, despite many long-winded, optimistic announcements. In the wake of deep learning and the associated neural networks, however, the field has received such a tremendous boost in the past 10 years that it is now often difficult to tell whether you are chatting with a computer or a human being. Today, professional novelists [3] and newspaper writers even freely admit to letting their computer co-drivers take the wheel for wordy passages and plot development [4].

If you type questions for the bot into ChatGPT's browser interface, the system pauses briefly and then answers in a jerky stream of text, much like a call center agent talking to an inquiring customer. The AI not only answers general knowledge questions, but also writes entire newspaper articles on command. For example, within half a minute of the command "Write an introductory magazine article …" (Figure 2), the text beneath it, introducing parallel programming with Go, trickled out of the browser. The results seem amazingly professional, and this works in other languages, too. You might suspect that hordes of sports and financial journalists have been using similar systems for years to churn out the same article formats on minor league games or stock market fluctuations by the dozen.

Terminal as a Life Coach

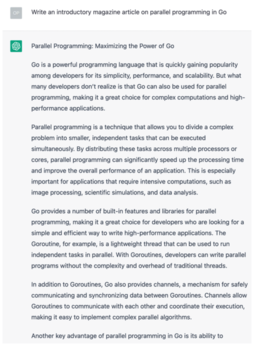

Now, if you don't want to keep signing into OpenAI.com with your email address, password, and annoying I-am-not-a-robot challenges before you can enter your requests, you can register on the developer page and retrieve an API key [5] (Figure 3). If a program sends this key as an authentication token with its API requests, OpenAI.com returns the answer in the HTTP response, and a simple command-line tool can grab it and print it in the terminal. This gives you a ready-to-rumble command-line interface (CLI) to pretty much all of today's common knowledge.

Figure 3: You can get an API token for free to avoid the hassle of constantly signing into the OpenAI website.

The auth token for playing around is available after registration with a valid email address and phone number. OpenAI additionally offers a payment model [6], with token-based charges to your credit card. Because limits in free mode are so generous, you won't incur costs for normal use, so you can usually skip entering your credit card data in the first place.

Using the go-gpt3 package from GitHub, the Go program in Listing 1 communicates with GPT's AI model with just a few lines of code. The program sends questions – known as prompts – to the GPT server as API requests under the hood. If the API server understands the content and finds an answer, it bundles what is known as a completion into an API response and returns it to the client.
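The completion travels back to the client as JSON. The following sketch decodes the text of the first choice from a response body with `encoding/json`. The struct models only a small subset of the fields in OpenAI's completion responses (`choices`, `text`, `index`, `finish_reason`), and the sample body is hand-written for illustration, not real server output. In Listing 1, go-gpt3 does this decoding itself and exposes the result as `resp.Choices[0].Text`; `firstCompletion` is a hypothetical helper.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// completionResponse models only the fields this sketch needs; real
// responses carry more (id, model, usage, and so on).
type completionResponse struct {
	Choices []struct {
		Text         string `json:"text"`
		Index        int    `json:"index"`
		FinishReason string `json:"finish_reason"`
	} `json:"choices"`
}

// firstCompletion extracts the text of the first choice from a raw
// JSON response body.
func firstCompletion(body []byte) (string, error) {
	var resp completionResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return "", err
	}
	if len(resp.Choices) == 0 {
		return "", fmt.Errorf("response contains no choices")
	}
	return resp.Choices[0].Text, nil
}

func main() {
	// Hand-written sample body, for illustration only.
	body := []byte(`{"choices":[{"text":"\n\n1. Parallel programming in Go","index":0,"finish_reason":"stop"}]}`)
	text, err := firstCompletion(body)
	if err != nil {
		panic(err)
	}
	fmt.Println(text)
}
```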

Listing 1: openai.go

```
01 package main
02 import (
03   "context"
04   "fmt"
05   gpt3 "github.com/PullRequestInc/go-gpt3"
06   "os"
07 )
08 type openAI struct {
09   Ctx context.Context
10   Cli gpt3.Client
11 }
12 func NewAI() *openAI { // constructor; caller must call init() next
13   return &openAI{}
14 }
15 func (ai *openAI) init() {
16   ai.Ctx = context.Background() // never cancelled or timed out
17   apiKey := os.Getenv("APIKEY") // API token from the environment
18   if apiKey == "" {
19     panic("Set APIKEY=API-Key")
20   }
21   ai.Cli = gpt3.NewClient(apiKey)
22 }
23 func (ai *openAI) printResp(prompt string) {
24   req := gpt3.CompletionRequest{
25     Prompt:      []string{prompt},
26     MaxTokens:   gpt3.IntPtr(1000),
27     Temperature: gpt3.Float32Ptr(0), // 0 = least random output
28   }
29   err := ai.Cli.CompletionStreamWithEngine( // streams the answer in chunks
30     ai.Ctx, gpt3.TextDavinci003Engine, req,
31     func(resp *gpt3.CompletionResponse) { // called for each chunk
32       fmt.Print(resp.Choices[0].Text)
33     },
34   )
35   if err != nil {
36     panic(err)
37   }
38   fmt.Println("") // final newline after the streamed text
39 }
```

Listing 1 wraps the communication logic in a constructor, NewAI(), and an init() method starting in line 15, which fetches the API token from the APIKEY environment variable and uses it to create a new API client in line 21. To let other functions in the package use the client, line 21 stores it in a structure of the openAI type defined in line 8. The constructor hands the calling program a pointer to this structure, and Go's receiver mechanism passes the structure along implicitly whenever the program calls one of the methods defined on it.

Object Orientation in Go

The context object created in line 16 lets a program cancel an active request from the outside, for example, because it is taking too long. The simple application in Listing 1 doesn't need this, but the gpt3 library requires a context anyway, so line 16 stores it in the openAI structure, where the printResp() method starting in line 23 can access it later.
