Bonding your network adapters for better performance

Together

© Photo by Andrew Moca on Unsplash

Combining your network adapters can speed up network performance – but a little more testing could lead to better choices.

I recently bought a used HP Z840 workstation to use as a server for a Proxmox [1] virtualization environment. The first virtual machine (VM) I added was an Ubuntu Server 22.04 LTS instance with nothing on it but the Cockpit [2] management tool and the WireGuard [3] VPN solution. I planned to use WireGuard to connect to my home network from anywhere, so that I can back up and retrieve files as needed and manage the other devices in my home lab. WireGuard also gives me the ability to use those sketchy WiFi networks that you find at cafes and in malls with less worry about someone snooping on my traffic.

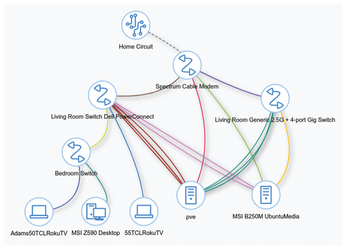

The Z840 has a total of seven network interface cards (NICs) installed: two on the motherboard and five more on two separate add-in cards. My second server with a backup WireGuard instance has 4 gigabit NICs in total. Figure 1 is a screenshot from NetBox that shows how everything is connected to my two switches and the ISP-supplied router for as much redundancy as I can get from a single home network connection.

[...]

Buy this article as PDF

(incl. VAT)

Buy Linux Magazine

Subscribe to our Linux Newsletters

Find Linux and Open Source Jobs

Subscribe to our ADMIN Newsletters

Support Our Work

Linux Magazine content is made possible with support from readers like you. Please consider contributing when you’ve found an article to be beneficial.

News

-

Parrot OS Switches to KDE Plasma Desktop

Yet another distro is making the move to the KDE Plasma desktop.

-

TUXEDO Announces Gemini 17

TUXEDO Computers has released the fourth generation of its Gemini laptop with plenty of updates.

-

Two New Distros Adopt Enlightenment

MX Moksha and AV Linux 25 join ranks with Bodhi Linux and embrace the Enlightenment desktop.

-

Solus Linux 4.8 Removes Python 2

Solus Linux 4.8 has been released with the latest Linux kernel, updated desktops, and a key removal.

-

Zorin OS 18 Hits over a Million Downloads

If you doubt Linux isn't gaining popularity, you only have to look at Zorin OS's download numbers.

-

TUXEDO Computers Scraps Snapdragon X1E-Based Laptop

Due to issues with a Snapdragon CPU, TUXEDO Computers has cancelled its plans to release a laptop based on this elite hardware.

-

Debian Unleashes Debian Libre Live

Debian Libre Live keeps your machine free of proprietary software.

-

Valve Announces Pending Release of Steam Machine

Shout it to the heavens: Steam Machine, powered by Linux, is set to arrive in 2026.

-

Happy Birthday, ADMIN Magazine!

ADMIN is celebrating its 15th anniversary with issue #90.

-

Another Linux Malware Discovered

Russian hackers use Hyper-V to hide malware within Linux virtual machines.